Logging into services on computers didn’t suck for a beautiful, brief period of time in the 1960s. Then some jerk somewhere did something rude and passwords were invented. Ever since that moment, logging in to stuff has basically sucked.

Computer people call it “Authenticating”, by the way, and it’s pretty much what it says it is. If I say my name is “Clint Ecker”, you can authenticate that claim by asking me to produce a valid government-issued identification card (really anything you’d be satisfied with).

But how do you prove you are who you say you are… on the Internet? You could upload a photo of a valid-looking government-issued identification card, but that would be difficult to authenticate, and a little annoying to do every time you need to log in.

Historically, online services have allowed each user to select a super-secret phrase, word, or just sequence of characters. The server will store that sequence of characters somehow, and when someone comes around claiming to be “clintecker”, the server can challenge them to produce the secret phrase. We know these phrases as “passwords” or “passphrases”, and there is a long tortured history of people writing them down, picking common words, losing them, and accidentally sharing them with nefarious individuals. There’s an equally long and tortured history of entities and corporations not securing this information and/or allowing criminals to steal and exploit it.

The last thirty to forty years (maybe all of it?) of computers have borne witness to many fits and starts at making the identifier and password system easier to use and harder to abuse. Unfortunately, while almost all the approaches have made users safer, they have almost always increased friction for the user. Adding additional factors (second and third companions to the venerable password), forcing new passwords on a schedule, and enforcing arcane complexity rules undoubtedly make those who don’t go insane safer.

Bad actors eventually just move on and invent entirely new approaches for stealing, scamming or pilfering second and third factors out of users.

It’s someone else’s problem!

Many entities simply gave up and delegated the entire category of authentication to third parties. Companies like Facebook, Microsoft, Apple, and Google all operate identity providers in the form of an account linked to that corporation. Many folks have built open-source protocols like OpenID and OpenID Connect. There are entire businesses built on top of these sorts of things in an attempt to help individuals and small companies operate their own identity providers.

Most services still build systems where you log in directly to their service using:

- An identifier (a username, email address, or phone number) plus some kind of password.
- An external identity that delegates authentication to some third party: Google, Facebook, Apple.

If you’re lucky, they might also let you link a 2-factor (TOTP) application. Usually if they are using your phone number as an identifier, the 2nd factor will be the ability to receive text messages at that number (essentially delegating identity to the telephone system).

If they’re using an email address as an identifier, you can sometimes get a secret login link sent there and if you know the credentials for that email address, you can obtain that login link (delegating identity to some email server operator somewhere).

There are a number of disadvantages with all of these approaches:

- Your identity, whether it’s managed directly by the service or delegated to Facebook, Verizon, or Gmail, is operated by some other entity whose interests may align with yours most of the time, but not all of the time. Your account can be turned off at any time, for any number of reasons, and your identity will go along with it.
- If you choose to operate your own identity provider (maybe you run an OpenID server on your own domain), you’re kind of screwed because almost no public services or apps allow any old generic OpenID provider.
- You can operate your own domain and email server, but then you have to administer an email server and protect it with your life. You also have to figure out DKIM, SPF, and DMARC. Good luck!
- If the service isn’t delegating authentication to another party, you are storing a password with another entity that you honestly know nothing about.
  - You have to hope they keep that password safe by hashing and salting it and throwing away the plain-text version.
  - You have to hope they don’t get hacked and that someone doesn’t just insert some code into the authentication flow and steal all the passwords.
  - Conversely, you too must keep the password safe, make sure it’s sufficiently complex, and not re-use it anywhere.
- You have to hope you don’t operate a sufficiently popular account somewhere and become a target for SIM jacking or other plots to trick you into divulging your additional factors (two-factor texts, TOTP codes, etc). Many times these can be used to reset the password on your accounts.

So why not use Cryptography to login?

Cryptography is all about proofs and also proof. There’s also a special type of cryptography, named asymmetric or public key cryptography, that is all about providing a cryptographic identity system. That identity is the public part of your key pair, commonly just called the public key.

This is the same public key you’d put into an ~/.ssh/authorized_keys file for your SSH daemon, the same public key you might upload to your GitHub account, and it belongs to the same key pair you might use to digitally sign a message to prove your identity… that last bit being especially important.

In fact, using asymmetric keys to SSH into your servers is exactly this. Your server has a list of Authorized Keys, or identities which are allowed to remotely connect as a particular user. When you connect, you use your private key to prove you own the identity associated with one of those keys.

SSL/TLS bootstraps itself in a similar way. Your browser and the server use asymmetric keys to authenticate the server and to agree on some random data. This random data is then used to derive a “session key”, and from that point forth, both parties use that shared key to encrypt traffic.

Sure, all of these things can be technically accomplished using a shared secret like a passphrase. You could sign or encrypt a message using that passphrase and both you and the other party (a website, maybe?) could encrypt and decrypt the data. The issue is that the credentials (the password) required to facilitate those operations must be shared in at least two places.

There is a whole other world to talk about with how SSL/TLS Public Key Certificates are supposed to engender some level of trust and comfort because of something called the “Certificate Chain of Trust”. This means the certificate a server is presenting to you should show that it holds a signature from an entity your browser inherently trusts. Every browser has a list of root and intermediate signers that, when they sign other certificates, confer some level of authenticity. This is because each layer of the trust chain is a registered corporation that has a motive (profit) to perform as much verification as corporately possible to ensure that it isn’t issuing an SSL certificate for a domain to someone who doesn’t legitimately control it.

Sharing is Bad

With public key cryptography, only public information is used to authenticate a cryptographic signature. (People sometimes loosely compare this to a Zero Knowledge Proof, although a signature isn’t formally one.) No secret information needs to leave your device, only cryptographic proof. If someone comes around saying “Hi I own key 345” then anyone can say “OK, prove it to me: sign the message ‘this message’ with the private part of your key and tell me the signature”.

What is a signature, anyway? Well, it’s the data that’s generated when you run a message along with a private key through a certain type of cryptographic function. Ultimately, it produces a short blob of data that can be represented as a bunch of ones and zeros, or a string like “a72fcd82378db”.

You can send this signature out on the internet, paste it on a big wall in your city, wear it on a t-shirt, or use it as your email signature. To everyone else on earth it looks like a random, meaningless string of data.

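But the big thing here is that there is a second cryptographic function out there that can accept that signature and the plaintext message (“this message”) and from those two inputs, “recover” the public portion (the identity) of the key that signed the message. (This recovery trick is a property of the ECDSA signatures Ethereum uses; other schemes instead check the signature against a public key you already have.)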

At this point you might wonder, “isn’t that shared message the ‘shared data’ of this system?” This is true, but there is a very nuanced difference. While that input message must be shared between at least the two parties involved, it can also be completely public. This is the critical difference: a passphrase must also be shared, but only between two very specific entities. If that number ever increases beyond 2, the identity is compromised. This is a complete inversion of the power structure in identity.

So even if I gave you the cryptographic signature and the whole world knew the original plain-text, all they could deduce would be the public portion, the identity, of the key that produced the signature and nothing more.
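To make that concrete, here is a minimal sketch using nothing but the crypto module built into Node.js. It is an illustration under a couple of assumptions, not how any wallet does it: it uses an Ed25519 key pair instead of Ethereum’s secp256k1 keys, and because Ed25519 has no public key recovery, the verifier is simply handed the public key. The variable names are made up for this example.

```javascript
const crypto = require('crypto');

// A throwaway key pair. The public key is the "identity".
const { publicKey, privateKey } = crypto.generateKeyPairSync('ed25519');

// The message can be completely public.
const message = Buffer.from('this message', 'utf8');

// Only the holder of the private key can produce this signature.
const signature = crypto.sign(null, message, privateKey);

// Anyone with the public key, the message, and the signature can check it.
const valid = crypto.verify(null, message, publicKey, signature);

// The same signature says nothing about any other message.
const otherMessage = Buffer.from('another message', 'utf8');
const tampered = crypto.verify(null, otherMessage, publicKey, signature);

console.log(signature.toString('hex'));
console.log({ valid, tampered });
```

The hex string printed on the first line is the kind of thing you could wear on a t-shirt: it proves the key holder signed “this message” and gives away nothing about the private key.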

Isn’t this just encryption?

This is related to, but different from, encrypting things. The principles are all the same but things go in the opposite direction.

If you tell me you are “key 256” then I can say, “great, I will encrypt a message to you using the public part of that key. Then I’ll send you the encrypted data.” If the individual (or computer) isn’t being deceptive about which key it owns (its identity), it will be able to use its private key to decrypt and view the plain-text version of the message. If they were merely bluffing, no harm. It’s just a meaningless stream of random data.
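Here is a rough sketch of that opposite direction, again using only Node.js’s built-in crypto module. It assumes an RSA key pair with the default OAEP padding purely for illustration; the names `keyOwner` and `bluffer` are invented for the example and stand in for the real owner of “key 256” and someone merely claiming to be.

```javascript
const crypto = require('crypto');

// The real owner of the key, and someone bluffing about owning it.
const keyOwner = crypto.generateKeyPairSync('rsa', { modulusLength: 2048 });
const bluffer = crypto.generateKeyPairSync('rsa', { modulusLength: 2048 });

// I encrypt a message using only the PUBLIC part of the claimed key.
const ciphertext = crypto.publicEncrypt(
  keyOwner.publicKey,
  Buffer.from('hello, key 256', 'utf8'),
);

// The real owner can decrypt it with the private part.
const plaintext = crypto
  .privateDecrypt(keyOwner.privateKey, ciphertext)
  .toString('utf8');

// The bluffer cannot: decryption with the wrong key fails.
let blufferResult;
try {
  blufferResult = crypto
    .privateDecrypt(bluffer.privateKey, ciphertext)
    .toString('utf8');
} catch (err) {
  blufferResult = null;
}

console.log({ plaintext, blufferResult });
```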

The most awesome part is that nothing private was ever shared between the two parties. Passwords weren’t transmitted over the web, or through some socket, or any port anywhere. No unsalted MD5 hashes loitering in a public S3 bucket. Just math on both ends. The other end doesn’t have to know any kind of password or any secret data at all.

Public Keys are Identities

So that’s all a whole lot of words that boil down to “the Public Key is a very important part of Public Key Cryptography”. And while it is all about identity, identities are cheap and abundant in public key cryptography. You simply generate a new key pair and you’re off to the races under a new identity.

It makes sense that utilizing cryptographic signing with asymmetric keys could go a long way toward making authenticating to a service easier. On top of that, no shared credentials means there are no credentials to potentially mishandle. The entity simply stores your public key as the user identifier. No need to use some external account identifier that people can fake or steal, or get shut down on a whim. If the service needs to use email, or to send a text, they can still prompt you to supply that information in a domain outside authentication.

And then there are the operational advantages of using public key cryptography for authentication. You don’t have to think about all the vagaries of implementing (or leasing) an authentication system. Nor do you have to think about how you’re going to safely and securely read, store, and transmit all the state objects required to underlie that system. You barely even have to run anything more complicated than an HTML page with a little JavaScript.

What does Public Key Authentication look like?

In a world of cryptographic identity, “authenticating” is as easy as:

- Sending a plain-text “Authentication Message” to the entity attempting to authenticate.
- The entity signs the message with their private key, and optionally says “Here’s my public key too”.
- The authentication system “recovers” the public part of the key and compares it to the stated public key from the entity.
- If they match, the user has “authenticated”, and the identity involved is the public part of the key.
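The steps above can be sketched in a few lines with Node.js’s built-in crypto module. This is a simplified stand-in, not a real protocol: it assumes Ed25519 keys, which cannot “recover” a public key from a signature, so the server verifies the signature against the public key the entity states instead. The function names (`createAuthMessage`, `signAuthMessage`, `authenticate`) are hypothetical.

```javascript
const crypto = require('crypto');

// Step 1 (server): produce a plain-text authentication message.
function createAuthMessage() {
  const challenge = crypto.randomBytes(16).toString('hex');
  return `Please sign this message to log in. Challenge: ${challenge}`;
}

// Step 2 (entity): sign the message and state the public key alongside it.
function signAuthMessage(message, keyPair) {
  const signature = crypto.sign(null, Buffer.from(message, 'utf8'), keyPair.privateKey);
  return {
    signature: signature.toString('hex'),
    publicKey: keyPair.publicKey.export({ type: 'spki', format: 'der' }).toString('hex'),
  };
}

// Steps 3 and 4 (server): check the signature against the stated public key.
// Returns the identity (the public key) on success, or null on failure.
function authenticate(message, response) {
  const publicKey = crypto.createPublicKey({
    key: Buffer.from(response.publicKey, 'hex'),
    format: 'der',
    type: 'spki',
  });
  const ok = crypto.verify(
    null,
    Buffer.from(message, 'utf8'),
    publicKey,
    Buffer.from(response.signature, 'hex'),
  );
  return ok ? response.publicKey : null;
}

// Usage: Alice logs in honestly; Mallory signs with her own key but claims Alice's.
const alice = crypto.generateKeyPairSync('ed25519');
const mallory = crypto.generateKeyPairSync('ed25519');
const message = createAuthMessage();

const aliceResponse = signAuthMessage(message, alice);
const honest = authenticate(message, aliceResponse);

const forgedResponse = {
  signature: signAuthMessage(message, mallory).signature,
  publicKey: aliceResponse.publicKey,
};
const rejected = authenticate(message, forgedResponse);

console.log({ authenticated: honest !== null, rejected });
```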

Easy right? Well it is, but enabling this sort of interaction between a web browser and something like a modern web application also needs standards and protocols that most people can agree on. It means that common classes of attacks or misconfigurations of the authentication parameters are documented and made difficult for developers to trip over.

It also means the user must be able to “create identities” on demand and switch between them on demand. There would need to be a common language, an API, that apps could use to talk to key-managing applications, and know what kinds of responses they could expect.

Making this work between a web app and a key pair application running on an Android phone somewhere else in the world is another challenge that would require a whole other set of tools.

People are bad at public / private keys! Are they?

It should have also become clear at this point that as much as the public portion of the key is a huge part of Public Key Cryptography, the private portion is equally, if not vastly more, important. If I know the private part of your key and I can gain access to your encrypted messages, I can literally read everything.

I can also masquerade as you online by providing cryptographic proofs using your private key.

Historically, the mechanisms devised by programmers for managing key pairs were command line tools or very clunky desktop applications (that interacted with those command line tools). So there definitely have been a lot of examples with bad user interfaces. But just building a nice interface wouldn’t be enough; there would need to be a common protocol for those applications to communicate with other applications.

A Wallet in your browser, on your phone, and running on the blockchain

So those “applications” I’ve been talking about are almost universally called “Wallets” when a person interacts with them for the purposes of cryptocurrencies—and there is seemingly an endless array of them. They’re a critical part of this ecosystem because you must trust that the developer of a wallet application isn’t going to just take your private keys and run.

How do you determine if that’s the case? I don’t know, really 😅. Perhaps there are people out there who inspect all the code that comprises their open-source wallet and then go on to personally compile the binary themselves. In reality though, almost everyone relies on secondary sources of quality and trust; whether that source of trust is a long-time friend who’s been around the blockchain, the browser App Store marking the developer as “verified”, or if it’s listed on the Apple App Store.

There are many different flavors of wallets:

- A custodial wallet is where a 3rd party completely manages the wallet and its keys. There may not even be a public key identity explicitly associated with your account, but rather a large wallet with its own address, owned by the third party, that provides instant liquidity (a giant pool of currency) for you when you want to send cryptocurrency to another address.
- A non-custodial wallet is where you have an application on your desktop, in a browser extension, or running on an Android phone or iPhone.
  - The application running on your device manages your private keys and hopefully keeps them safe. The private keys never leave your device, and you hope your device is super secure and there’s a reasonably small chance you’ll be targeted by nation-state actors.
  - There’s another kind of non-custodial wallet that runs as an application on a hardened, dedicated device. These can connect to your laptop or phone through various methods to interact with other applications.
- A wallet that lives on the blockchain. These are sometimes called “Smart Wallets,” or multi-sig wallets. With smart wallets like Argent and Gnosis Safe, the wallet application is not running on any one device or server. Instead these products exploit the very nature of Ethereum to run on the blockchain itself. This is actually a pretty interesting idea.

Applications deployed to the blockchain are public. You can read the code for yourself and many times companies will upload verified source code, so you’re not left parsing through automatically decoded byte code.

And while the code implementing a contract is technically immutable due to the nature of the blockchain, it’s not perfect. Contracts can be written with “proxies” so that they can be upgraded and have bugs fixed. In practice, developers can introduce and remove code from the wallet as needed. The upside is that all of this is “public”, albeit a little obscure to track and understand.

This might sound like a lot, but it represents nothing less than an explosion in the ability for average folks to safely manage their cryptographic identities. That’s also not to say that these types of applications are perfect, but they are light years beyond what’s been historically available and are continually being updated and refined as the ecosystem grows.

Sign in With Ethereum aka EIP 4361

All of this brings us to a new protocol called “Sign in with Ethereum.” SIWE is formally defined in a proposal called EIP-4361 spearheaded by the nice folks over at Spruce ID. You should definitely check out their write-up.

The fact that there is now a critical mass of individuals, and importantly, a critical mass of average individuals who can safely manage their key pairs, opens up a direct avenue to implementing public key authentication for the masses. This is exactly what “Sign in with Ethereum” intends to accomplish.

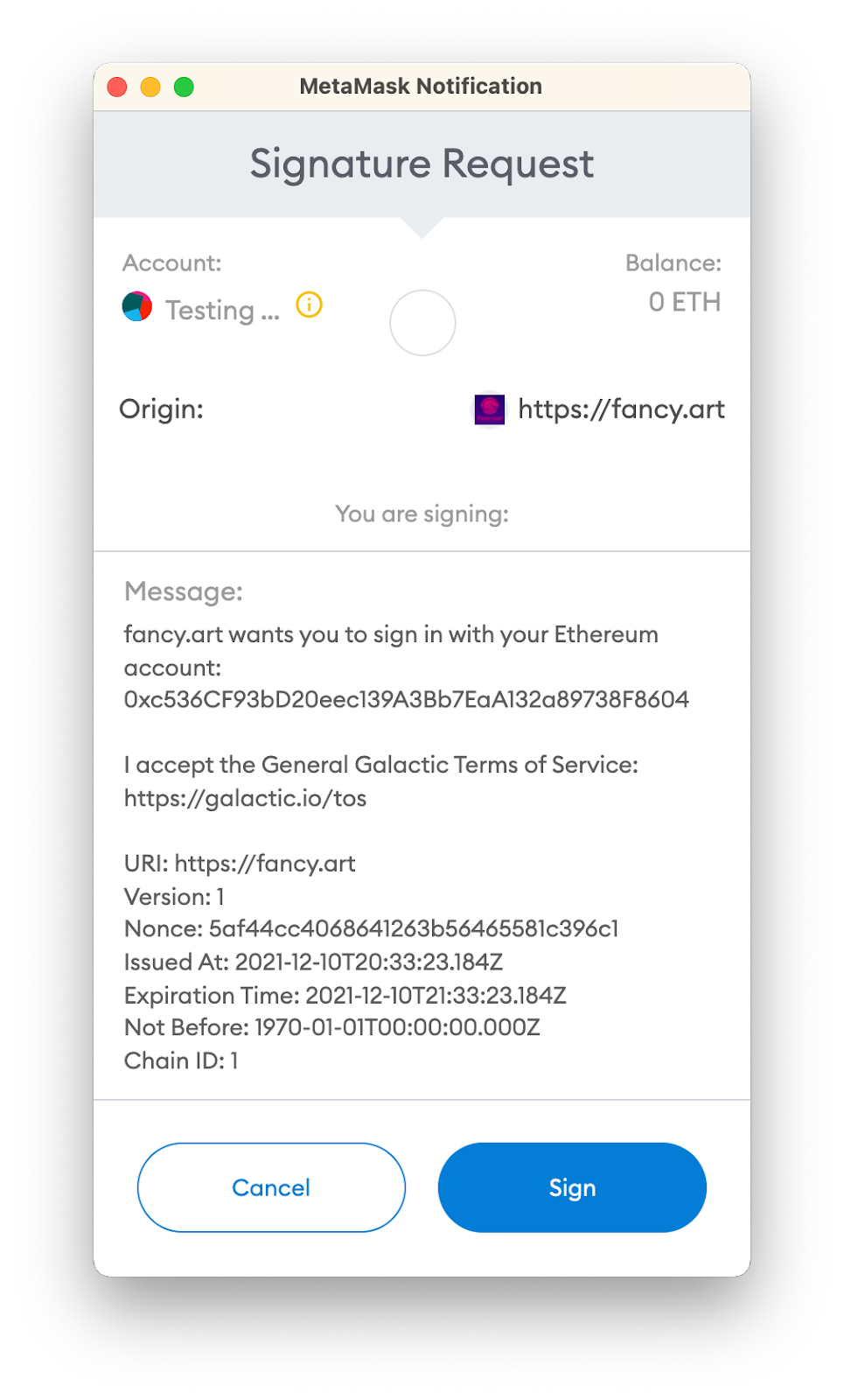

The mechanics of SIWE are not complicated. A developer can send a message to a wallet and request that it be signed with a private key. The wallet then delivers the resulting signature back to the application, which can authenticate it by determining the identity (public key) of the signer. If it checks out, you’ve authenticated with no private information being shared.

All the channels for delivering and receiving this information are now in place, whether to in-browser wallets such as MetaMask or to on-device wallets with protocols like WalletConnect.

The protocols for signing such messages (EIP-191 and EIP-712) are in place.

Software libraries (web3.js, ethers.js, and literally hundreds more) are written to facilitate developers taking advantage of this nexus of technologies.

Undoubtedly there are still concerns and questions around application trust, developer trust, and private key security. Scams are, and will always be, rampant throughout the entire cryptocurrency ecosystem. But all these items coming together have enabled the best authentication scheme and identity system possible in a generation or more and it would be foolish to not at least consider it.

On our most recent project, Fancy.art, we have fully implemented Sign In With Ethereum and integrated with Google’s Firebase authentication system to allow individuals to fully authenticate to our app using nothing but their wallet.

It only took a couple hours to get it working, and a couple more days to turn it into a standalone open-source JavaScript implementation we called eip4361-tools. Check it out and port it to your programming language of choice. Spruce has another independent implementation for JavaScript as well.

Using our library, eip4361-tools

There are three steps to authenticating an entity via Ethereum.

Step one: Signing a message

The first part is generating an authentication message that will be presented to the user, via a wallet application, for signing.

The format of the message is very specific, and it is defined in the EIP-4361 proposal. The message contains a number of fields that are required and a number that are optional.

The mandatory fields are an Ethereum address, the version of the message, a URI for scoping, a “nonce”, and an “issued-at” timestamp.

Why are these message fields mandatory? It all comes down to scoping and defeating a class of attacks called “replay attacks”.

Imagine that the login message only contained a static plaintext such as “Log Me In” and that I produced a signature of that plaintext using my private key. That signature would be valid, and my public key could totally be recovered from that signature.

Imagine, however, that this signature was intercepted by a third party. Because the message never changes, a signature that was valid once stays valid forever, so the attacker now holds the exact signature that corresponds to my public key for this static message. The attacker could now lie to the application by saying they are me and, when challenged, produce the intercepted signature I sent to the app during my authentication session.

The approach to defeat these classes of attacks is to:

- Include a “nonce” value. The key to a nonce is that it has a unique value, is random enough and long enough to be practically unguessable, and that some mechanism is used to enforce a requirement that the nonce may not be used more than one time.
- Additionally, a nonce can be associated with an expiration time, bounding the amount of time a user (or attacker) has to produce a valid signature.
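As a rough sketch of those two rules, here is a tiny single-use nonce store with an expiration time. It is an assumption-heavy toy: the `NonceStore` class is hypothetical, and the in-memory `Map` stands in for whatever datastore you would really use to enforce uniqueness across servers.

```javascript
const crypto = require('crypto');

class NonceStore {
  constructor(ttlMs = 5 * 60 * 1000) {
    this.ttlMs = ttlMs;
    this.pending = new Map(); // nonce -> expiry timestamp in milliseconds
  }

  // Issue a random, practically unguessable nonce and remember when it expires.
  issue(now = Date.now()) {
    const nonce = crypto.randomBytes(16).toString('hex');
    this.pending.set(nonce, now + this.ttlMs);
    return nonce;
  }

  // Accept a nonce at most once, and only before it expires.
  consume(nonce, now = Date.now()) {
    const expiresAt = this.pending.get(nonce);
    if (expiresAt === undefined) return false; // unknown or already used
    this.pending.delete(nonce); // a nonce is single use, valid or not
    return now <= expiresAt;
  }
}

// Usage, with explicit timestamps so the behaviour is easy to follow.
const store = new NonceStore(1000);

const fresh = store.issue(0);
const firstUse = store.consume(fresh, 500); // accepted
const replayed = store.consume(fresh, 600); // rejected: already used

const stale = store.issue(0);
const tooLate = store.consume(stale, 2000); // rejected: expired

console.log({ firstUse, replayed, tooLate });
```

An intercepted signature is useless against this, because the nonce inside the signed message has already been consumed by the time an attacker tries to replay it.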

The final message is a block of plain text laid out according to the template in EIP-4361.

The key aspect of this message is that its content is scoped to a very specific action. That is, a specific account logging into a specific website at a specific time. Paired with a one-time use nonce, we can be very certain that the message is unique in the context of its usage and the time it was used.
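For a feel of what such a message looks like, here is a small sketch that assembles one. The layout follows my reading of the published EIP-4361 text, which also puts the requesting domain and a Chain ID into the message; check the proposal itself before relying on the exact wording. The function name `buildSignInMessage` and every value passed to it are placeholders for illustration, and this is not the API of eip4361-tools.

```javascript
// Assemble a sign-in message in the EIP-4361 plain-text layout.
function buildSignInMessage(fields) {
  const {
    domain, address, statement, uri,
    version = '1', chainId = 1, nonce, issuedAt,
  } = fields;

  const lines = [
    `${domain} wants you to sign in with your Ethereum account:`,
    address,
    '',
  ];
  // The human-readable statement is optional.
  if (statement) lines.push(statement);
  lines.push(
    '',
    `URI: ${uri}`,
    `Version: ${version}`,
    `Chain ID: ${chainId}`,
    `Nonce: ${nonce}`,
    `Issued At: ${issuedAt}`,
  );
  return lines.join('\n');
}

const exampleMessage = buildSignInMessage({
  domain: 'example.com',
  address: '0x' + '0'.repeat(40), // placeholder address
  statement: 'Sign in to the example app.',
  uri: 'https://example.com/login',
  nonce: '8f3a1c9e5b7d2460', // would come from the server in practice
  issuedAt: '2022-01-01T00:00:00.000Z',
});

console.log(exampleMessage);
```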

Once we’ve constructed this message we then ask a wallet to produce a public key signature of this message.

The entirety of this step could happen entirely in the browser. However, for the sake of the nonce, it is very useful for message generation to occur in a context that can ensure the uniqueness of such nonces. For example, you might use any datastore with the ability to enforce uniqueness (Redis, a filesystem, Firestore, MySQL) to store the nonce (and to subsequently mark it as having been used). Making this process opaque to the client (often a low-trust environment such as a browser) increases the security of the authentication system.

Step two: Produce a signature

The second step is the user’s wallet producing a signature. This step may happen in any number of ways, but a web developer will likely use a software library such as ethers.js or web3.js.

Firstly, the website must have already “connected” to the user’s wallet. This means that a user has authorized your code to interact with their wallet in a very limited capacity. This occurs in many different ways but the primary channels are direct in-browser communication with a wallet such as MetaMask, or over an external protocol such as WalletConnect or WalletLink.

Once a connection has been made to a wallet, we can see the wallet’s public address, compose our login message, and then submit a request to the wallet. This type of request is called personal_sign (web3 / ethers), and the format of the data it signs is defined in EIP-191. We execute this request with our login message as the plaintext argument, and the linked wallet will ask its operator whether or not they would like to perform the signing procedure.

Once the user selects to sign the message, the signature is generated and propagated back to the code that made the request.
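Without any library at all, the request might look roughly like the sketch below. It assumes a wallet provider object that exposes the `request` method described in EIP-1193 (in a browser with MetaMask installed, that is typically `window.ethereum`). The helper names `toHex` and `requestSignature` are made up for this example, and this is not necessarily how eip4361-tools does it.

```javascript
// Hex-encode a UTF-8 string, which is the form wallets expect for personal_sign.
function toHex(text) {
  const bytes = new TextEncoder().encode(text);
  return '0x' + Array.from(bytes, (b) => b.toString(16).padStart(2, '0')).join('');
}

// Ask the connected wallet for its address, then ask it to sign the message.
// The wallet prompts the user, and the promise rejects if they decline.
async function requestSignature(provider, message) {
  const [address] = await provider.request({ method: 'eth_requestAccounts' });
  const signature = await provider.request({
    method: 'personal_sign',
    params: [toHex(message), address],
  });
  return { address, signature };
}

// In a browser, usage would look something like:
//   const { address, signature } = await requestSignature(window.ethereum, loginMessage);
```

Passing the provider in as an argument keeps the function usable with any wallet channel that speaks the same request interface.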

Step three: Verifying the signature

The final step is verifying that the received signature was indeed produced by the connected wallet. This step can occur completely in the browser as well, but in our case it takes place in a private context on a server (technically inside a Google Cloud Function).

In the simplest terms, since we know both the signature and the message that was supposedly signed, we can use a function called ecrecover (ethers / web3) that accepts these parameters and produces the public portion of the key that was used to produce the signature. It is the counterpart to the signing operation, which signs a keccak-256 hash of the message rather than the raw message itself.

There is a lot of math involved in how this works, if you’re truly interested in diving in.

The output of the ecrecover function is the public portion of the key that was used to sign the message. And so we can perform the verification of this signature by merely comparing the address derived from this public key with the address of the connected wallet. If they are identical, then the user has successfully authenticated!
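The comparison itself is tiny. In the sketch below the recovery step is passed in as a function, so the example stays free of dependencies; with ethers you would likely hand it `ethers.utils.verifyMessage` (v5) or `ethers.verifyMessage` (v6), which take the message and signature and return the signer’s address. The name `isAuthenticated` is hypothetical, and a real server would also check the nonce and timestamps inside the message.

```javascript
// Compare the address recovered from the signature with the address the
// wallet claimed. Addresses are hex strings whose letter case can differ
// (checksummed vs. lowercase), so compare them case-insensitively.
function isAuthenticated({ message, signature, expectedAddress, recoverAddress }) {
  let recovered;
  try {
    recovered = recoverAddress(message, signature);
  } catch (err) {
    return false; // malformed signatures make recovery throw
  }
  return (
    typeof recovered === 'string' &&
    recovered.toLowerCase() === expectedAddress.toLowerCase()
  );
}

// Usage with ethers v5 might look like:
//   const { ethers } = require('ethers');
//   isAuthenticated({
//     message, signature, expectedAddress,
//     recoverAddress: ethers.utils.verifyMessage,
//   });
```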

From this point forward you would proceed in your application in the same way as you would with any other authentication scheme. You might initialize an authenticated session using the browser’s cookies or local storage, or integrate into your platform of choice’s authentication system. We used Firebase’s Custom Authentication support.

In Conclusion

Public Key authentication is closer to the mainstream than it has ever been. For certain applications such as for interacting with the Ethereum blockchain, authenticating users with their wallet is a no-brainer. For the vast masses on the internet who don’t yet have a cryptocurrency wallet, public key authentication is still a bit off in the future.

What’s exciting, though, is that the interoperable ecosystem of software and protocols bootstrapping itself within the Ethereum world can be directly translated to nearly any other domain. What remains is for key management tools, outside of Ethereum wallets, to be built into browsers (directly or with extensions like MetaMask), or simply into standalone desktop or mobile applications that communicate over WalletLink/WalletConnect.